Dataset

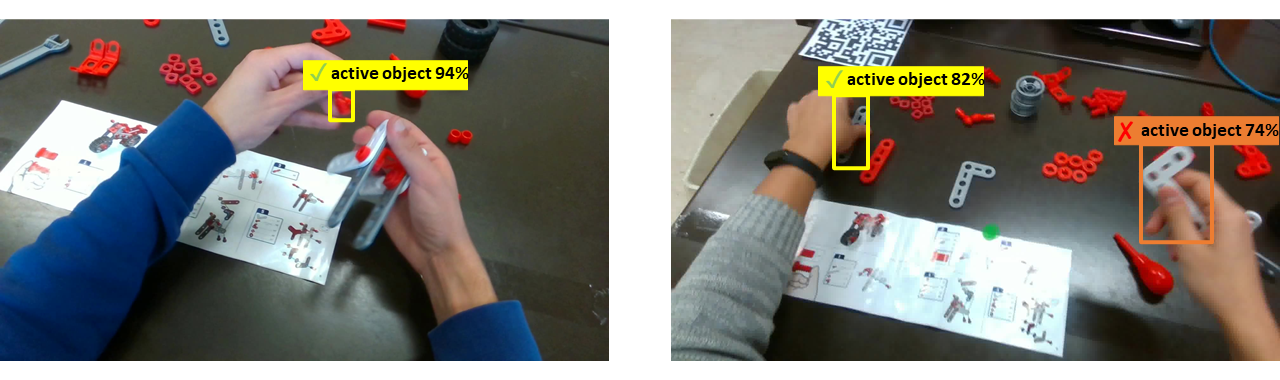

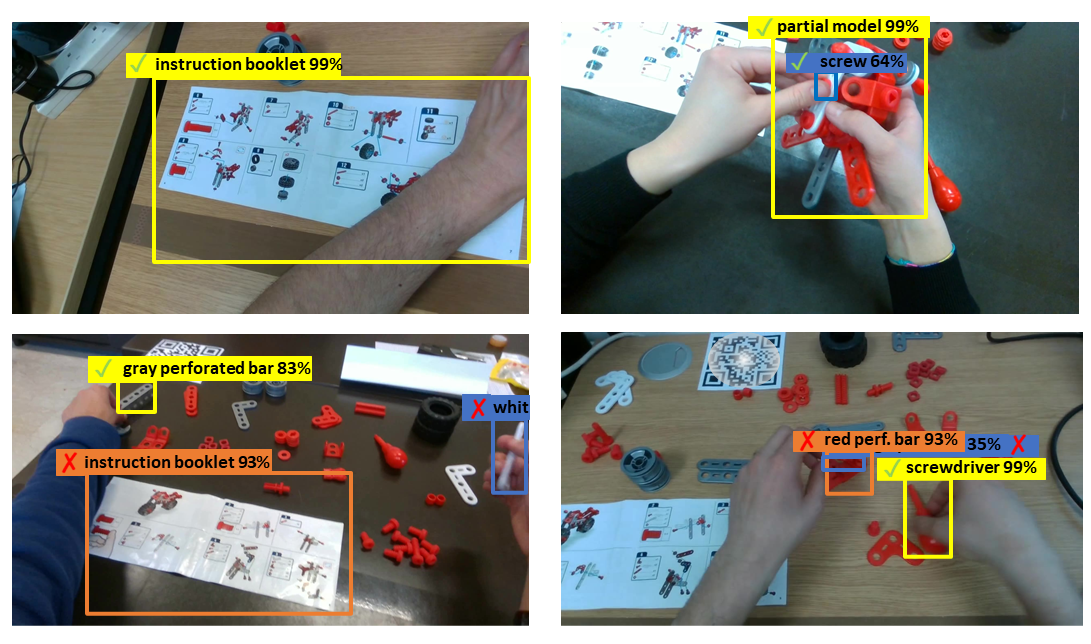

The MECCANO dataset was acquired in an industrial-like scenario in which subjects built a toy model of a motorbike. We considered 20 object classes: the 16 classes that categorize the 49 components, the two tools (screwdriver and wrench), the instruction booklet, and a partial_model class.
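The 20 object classes break down into the four groups listed above. The sketch below simply tallies them to make the composition explicit. The dictionary keys are hypothetical labels chosen for illustration; only the counts come from the dataset description.

```python
# Object-class groups as described above. The keys are hypothetical labels
# for this sketch; only the per-group counts come from the MECCANO description.
OBJECT_CLASS_GROUPS = {
    "component_classes": 16,    # classes grouping the 49 physical components
    "tools": 2,                 # screwdriver and wrench
    "instructions_booklet": 1,
    "partial_model": 1,
}


def total_object_classes(groups: dict) -> int:
    """Sum the number of classes contributed by each group."""
    return sum(groups.values())


if __name__ == "__main__":
    print(total_object_classes(OBJECT_CLASS_GROUPS))  # 20
```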

Additional details about the MECCANO dataset:

- 20 different subjects in 2 countries (Italy, UK)

- Video acquisition: 1920x1080 at 12.00 fps

- 11 training videos and 9 validation/test videos

- 8857 video segments temporally annotated with the verbs describing the actions performed

- 64349 active objects annotated with bounding boxes

- 12 verb classes, 20 object classes, and 61 action classes
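Because the videos are captured at 12 fps, converting between frame indices and timestamps is a common step when working with the temporally annotated segments. The following is a minimal sketch, assuming segment boundaries are expressed as 0-based, inclusive frame indices; that storage convention and the helper names are hypothetical and not taken from the dataset's annotation format.

```python
# Frame rate reported for MECCANO video acquisition.
FPS = 12.0


def frame_to_seconds(frame_index: int, fps: float = FPS) -> float:
    """Convert a 0-based frame index into a timestamp in seconds."""
    if frame_index < 0:
        raise ValueError("frame_index must be non-negative")
    return frame_index / fps


def seconds_to_frame(seconds: float, fps: float = FPS) -> int:
    """Return the 0-based index of the frame that contains the given timestamp."""
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    return int(seconds * fps)  # truncation equals floor for non-negative values


def segment_duration(start_frame: int, end_frame: int, fps: float = FPS) -> float:
    """Duration in seconds of a segment spanning [start_frame, end_frame] inclusive
    (the inclusive convention is an assumption of this sketch)."""
    if end_frame < start_frame:
        raise ValueError("end_frame must be >= start_frame")
    return (end_frame - start_frame + 1) / fps


if __name__ == "__main__":
    print(frame_to_seconds(24))         # 2.0
    print(segment_duration(120, 143))   # 2.0
```

If the annotations instead use exclusive end frames, drop the `+ 1` in `segment_duration`.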