EgoNature Dataset

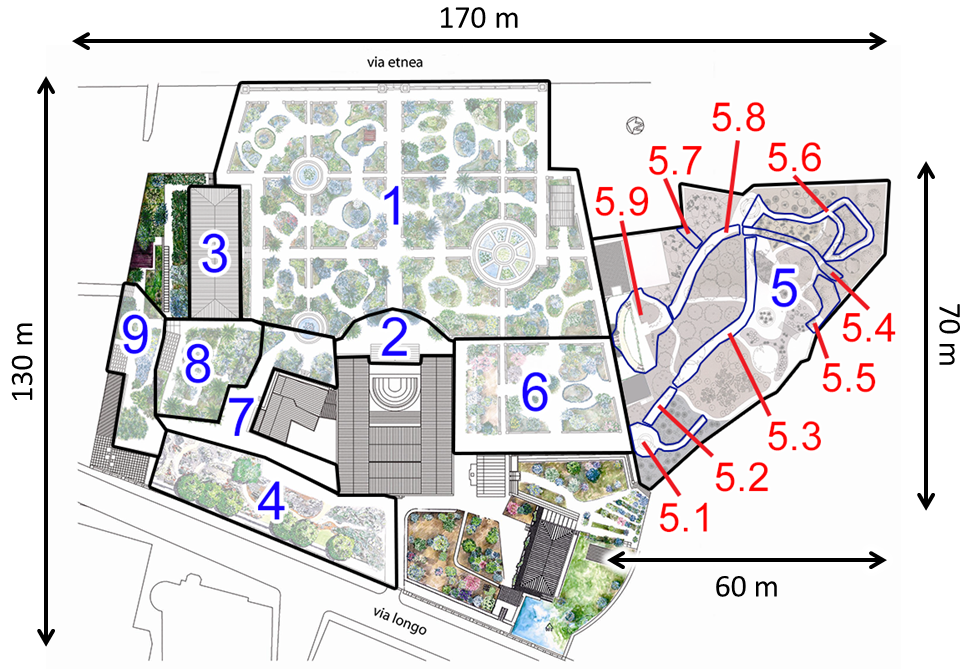

The dataset used in this work was collected by asking 12 volunteers to visit the Botanical Garden of the University of Catania. The garden is about 170 m long and about 130 m wide. In agreement with experts, we defined 9 contexts and 9 subcontexts of interest that are relevant for collecting behavioral information from visitors of the site. We asked each volunteer to explore the natural site while wearing a camera and carrying a smartphone. The wearable camera was used to collect egocentric videos of the visits, while the smartphone was used to collect GPS locations. The GPS data were later synchronized with the collected video frames.

We collected and labeled almost 6 hours of recordings, from which we sampled a total of 63,581 frames for our experiments. The selected frames were resized to 128×128 pixels to reduce the computational load.

For more details about our dataset, go to this page.

Methods

We treat localizing the visitor of a natural site as a classification problem. In particular, we investigate classification approaches based on GPS and on images, as well as methods that jointly exploit both modalities. Each of the considered methods is trained and evaluated on the proposed EgoNature dataset according to the three defined levels of localization granularity: 9 Contexts, 9 Subcontexts, and 17 Subcontexts.

Localization Methods Based on GPS: when GPS coordinates are available, localization can be performed directly by analyzing such data. We employed several popular classifiers: Decision Classification Trees (DCT), Support Vector Machines (SVM) with linear and RBF kernels, and k-nearest neighbors (KNN). Each of the considered approaches takes the raw GPS coordinates as input and produces a probability distribution over the considered classes as output. We tune the hyperparameters of the classifiers with a grid search using cross-validation. We remove duplicate GPS measurements from the training set when training GPS-based methods, as we observed that this improves performance. Note that, for a fair comparison, duplicate measurements are not removed at test time.
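To illustrate the GPS-based pipeline described above, the following is a minimal sketch of a k-nearest-neighbor classifier that removes duplicate training measurements and returns a probability distribution over classes. It is not the implementation used in this work: the function names, the toy coordinates, the class indices, and the choice of plain Euclidean distance on raw latitude and longitude are all assumptions made for illustration.

```python
import math
from collections import Counter


def deduplicate(samples):
    """Drop repeated (lat, lon, label) training samples, keeping first occurrences."""
    seen = set()
    unique = []
    for sample in samples:
        if sample not in seen:
            seen.add(sample)
            unique.append(sample)
    return unique


def knn_predict_proba(train, query, k, classes):
    """Return a probability distribution over `classes` for one GPS query.

    train: list of (lat, lon, label) tuples; query: (lat, lon).
    Each probability is the fraction of the k nearest training points
    carrying that label. Euclidean distance on raw coordinates is a
    simplification assumed here, not a detail taken from the paper.
    """
    by_distance = sorted(train, key=lambda s: math.dist((s[0], s[1]), query))
    neighbors = by_distance[:k]
    votes = Counter(label for _, _, label in neighbors)
    return [votes[c] / len(neighbors) for c in classes]


# Hypothetical toy measurements (not taken from EgoNature); labels are class indices.
train_raw = [
    (37.5150, 15.0830, 0),
    (37.5150, 15.0830, 0),  # exact duplicate, removed before training
    (37.5151, 15.0831, 0),
    (37.5152, 15.0830, 0),
    (37.5160, 15.0840, 1),
    (37.5161, 15.0841, 1),
    (37.5160, 15.0842, 1),
]

train = deduplicate(train_raw)
probs = knn_predict_proba(train, (37.5151, 15.0830), k=3, classes=[0, 1])
print(probs)
```

In this sketch, `k` is fixed by hand. In the setting described above, it would be one of the hyperparameters selected by grid search with cross-validation, and duplicates would be removed only from the training split.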

Localization Methods Based on Images: we consider three different CNN architectures: AlexNet, SqueezeNet and VGG16. The three architectures achieve different performance on the ImageNet classification task and require different computational budgets. In particular, AlexNet and VGG16 require the use of a GPU at inference time, whereas SqueezeNet is extremely lightweight and has been designed to run on CPU (i.e., it can be deployed on mobile and wearable devices). We initialize each network with ImageNet-pretrained weights and fine-tune each architecture for the considered classification tasks.

Localization Methods Jointly Exploiting Images and GPS: images and GPS are deemed to carry complementary information. Specifically, even inaccurate GPS measurements can be useful as a rough location prior, while images can be used to distinguish neighboring areas characterized by different visual appearance and geometry. We investigate how the complementary nature of these two signals can be leveraged using late fusion during classification. Late fusion can be seen as a kind of weighted average between the vectors of class scores produced by image- and GPS-based methods.
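The weighted-average view of late fusion can be sketched as follows. This is a minimal illustration, not the exact fusion rule used in this work: the function names, the single scalar `weight`, and the example score vectors are assumptions.

```python
def late_fusion(image_scores, gps_scores, weight):
    """Fuse two class-score vectors with a convex combination.

    `weight` is the share given to the image-based scores and
    (1 - weight) the share given to the GPS-based scores. The single
    scalar weight is an assumption made for this sketch.
    """
    if len(image_scores) != len(gps_scores):
        raise ValueError("score vectors must cover the same classes")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [
        weight * img + (1.0 - weight) * gps
        for img, gps in zip(image_scores, gps_scores)
    ]


def argmax(scores):
    """Index of the highest-scoring class."""
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical scores over three classes (not results from the paper).
image_scores = [0.5, 0.4, 0.1]  # the image model alone prefers class 0
gps_scores = [0.1, 0.7, 0.2]    # the GPS model alone prefers class 1

fused = late_fusion(image_scores, gps_scores, weight=0.5)
predicted_class = argmax(fused)
print(fused, predicted_class)
```

With equal weighting, the strong GPS evidence for class 1 outweighs the image model's narrow preference for class 0. In practice the weight would be chosen on held-out data rather than fixed by hand.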

Paper

F. L. M. Milotta, A. Furnari, S. Battiato, G. Signorello, G. M. Farinella. Egocentric visitors localization in natural sites. Journal of Visual Communication and Image Representation, 2019. Download the paper here.

Patent

G. M. Farinella, G. Signorello, A. Furnari, S. Battiato, E. Scuderi, A. Lopes, L. Santo, M. Samarotto, G. P. A. Distefano, D. G. Marano, "Integrated Method With Wearable Kit For Behavioural Analysis And Augmented Vision" (Italian Version: "Metodo Integrato con Kit Indossabile per Analisi Comportamentale e Visione Aumentata"), Patent Application number 102018000009545, filing date: 17/10/2018, Università degli Studi di Catania, Xenia Gestione Documentale S.R.L., IMC Service S.R.L.