The Face Deepfake Detection Challenge

Journal of Imaging, 2022

Luca Guarnera 1, Oliver Giudice 2, Francesco Guarnera 1, Alessandro Ortis 1, Giovanni Puglisi 3, Antonino Paratore 4, Linh M. Q. Bui 5, Marco Fontani 5, Davide Alessandro Coccomini 6, Roberto Caldelli 7,8, Fabrizio Falchi 6, Claudio Gennaro 6, Nicola Messina 6, Giuseppe Amato 6, Gianpaolo Perelli 9, Sara Concas 9, Carlo Cuccu 9, Giulia Orrù 9, Gian Luca Marcialis 9, Sebastiano Battiato 1

1 Department of Mathematics and Computer Science, University of Catania, 95125 Catania, Italy

2 Applied Research Team, IT Department, Banca d’Italia, 00044 Rome, Italy

3 Department of Mathematics and Computer Science, University of Cagliari, 09124 Cagliari, Italy

4 iCTLab s.r.l., 95125 Catania, Italy

5 Amped Software, 34149 Trieste, Italy

6 ISTI-CNR, via G. Moruzzi 1, 56124 Pisa, Italy

7 National Inter-University Consortium for Telecommunications (CNIT), 43124 Parma, Italy

8 Faculty of Economics, Universitas Mercatorum, 00186 Rome, Italy

9 Department of Electrical and Electronic Engineering (DIEE), University of Cagliari, 09123 Cagliari, Italy

Contact Email: luca.guarnera@unict.it

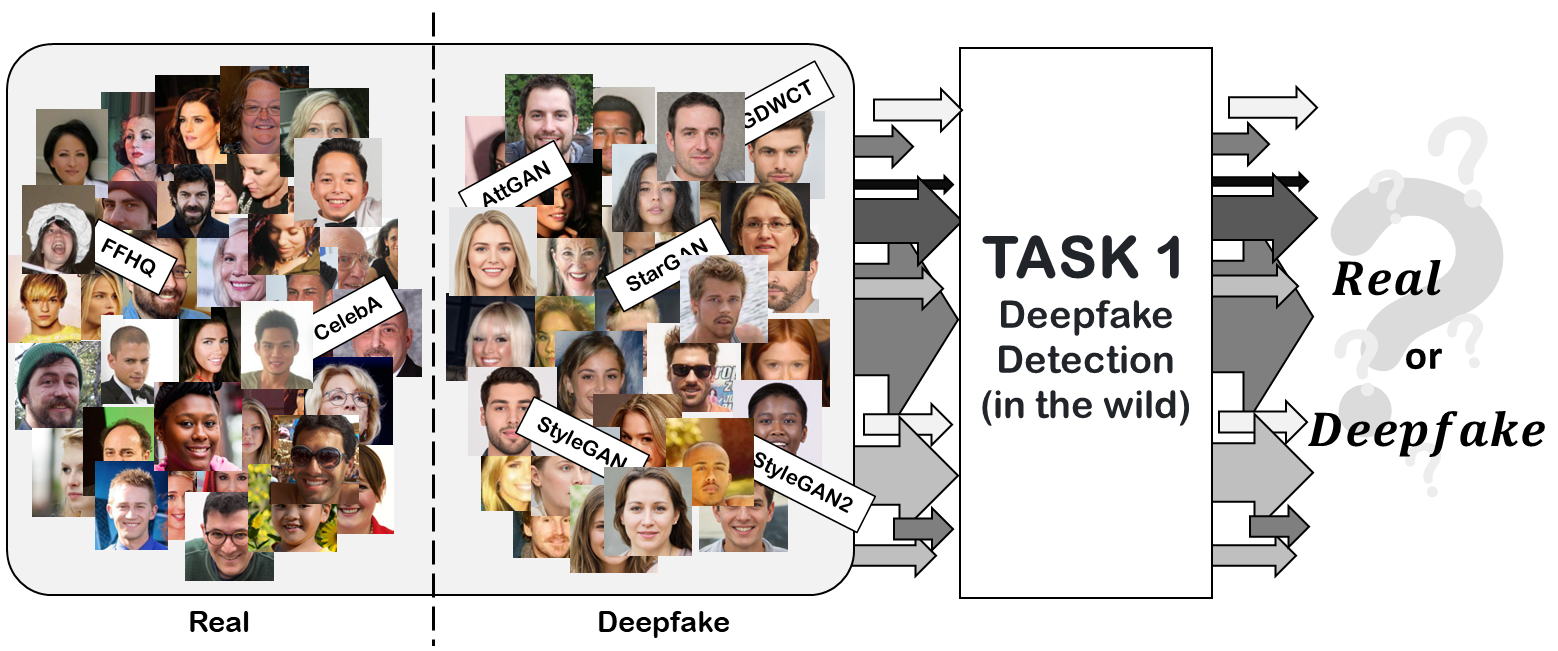

Task I: Deepfake detection task. Given a set of Real and Deepfake images created by different GAN engines, the objective is to create a detector able to correctly classify Deepfake images in any scenario.

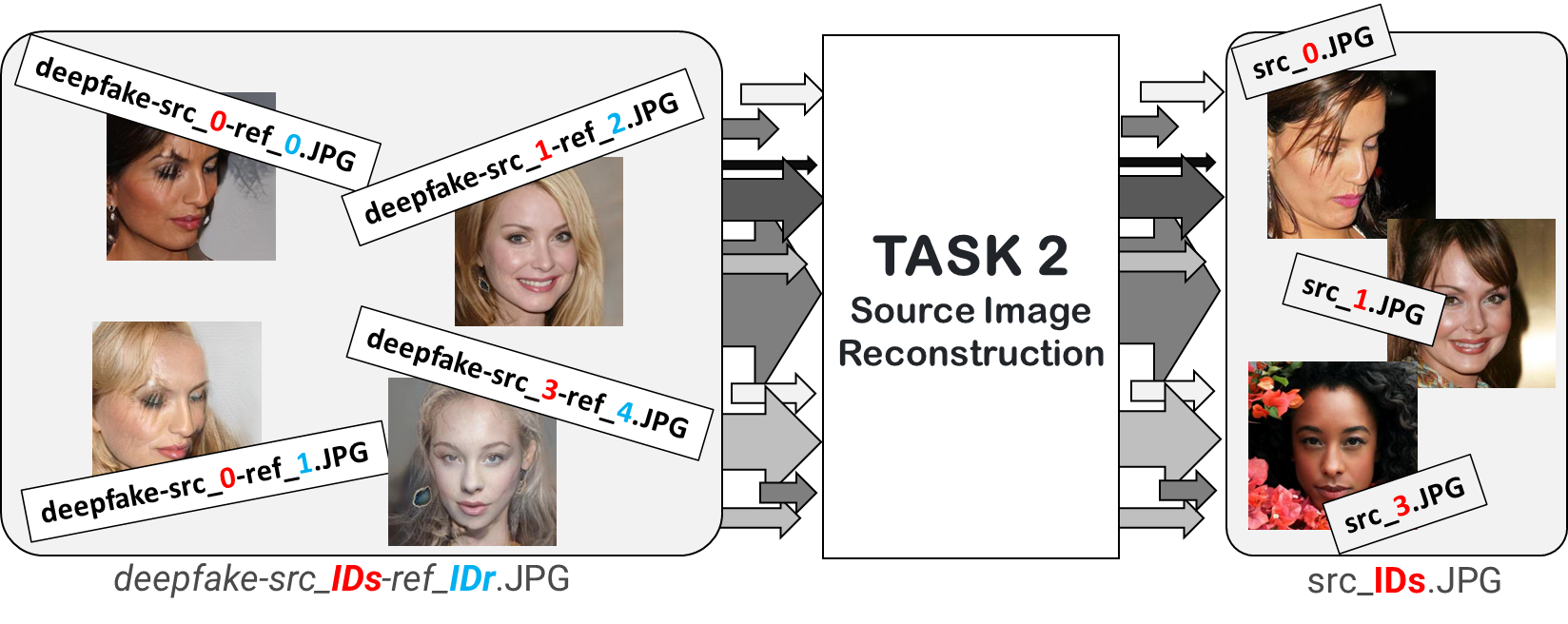

Task II: Source image reconstruction task.

ABSTRACT

Multimedia data manipulation and forgery has never been easier than today, thanks to the power of Artificial Intelligence (AI). AI-generated fake content, commonly called Deepfakes, has been raising new issues and concerns, but also new challenges for the research community. The Deepfake detection task has become widely addressed, but unfortunately, approaches in the literature suffer from generalization issues. In this paper, the Face Deepfake Detection and Reconstruction Challenge is described. Two different tasks were proposed to the participants: (i) creating a Deepfake detector capable of working in an “in the wild” scenario; (ii) creating a method capable of reconstructing original images from Deepfakes. Real images from CelebA and FFHQ and Deepfake images created by StarGAN, StarGAN-v2, StyleGAN, StyleGAN2, AttGAN and GDWCT were collected for the competition. The winning teams were chosen with respect to the highest classification accuracy value (Task I) and the minimum average Manhattan distance (Task II). Deep Learning algorithms, particularly those based on the EfficientNet architecture, achieved the best results in Task I. No winners were proclaimed for Task II. A detailed discussion of teams’ proposed methods with corresponding ranking is presented in this paper.


Datasets Details

https://iplab.dmi.unict.it/Deepfakechallenge/

Different Deepfake images were generated for the competition [WEB-PAGE].

For TASK 1: DEEPFAKE DETECTION TASK, real face images were taken from two datasets, CelebA [1] and FFHQ [2], and Deepfake images were generated with the StarGAN [3], GDWCT [4], AttGAN [5], StyleGAN [6] and StyleGAN2 [7] architectures. For TASK 2: SOURCE IMAGE RECONSTRUCTION, Deepfake images were created using the StarGAN-v2 [8] architecture.

DOWNLOAD DATASET

Portion of training set

TASK 1:

0-CelebA.zip, 0-FFHQ.zip, 1-ATTGAN.zip, 1-GDWCT.zip, 1-StarGAN.zip, 1-STYLEGAN.zip, 1-STYLEGAN2.zip.

TASK 2:

sources.zip, references.zip, deepfakes.zip.

Full training set

TASK 1:

0-CelebA.zip, 0-FFHQ.zip, 1-ATTGAN.zip, 1-GDWCT.zip, 1-StarGAN.zip, 1-STYLEGAN.zip, 1-STYLEGAN2.zip.

TASK 2:

sources.zip, references.zip, deepfakes.zip.

Test set

TASK 1:

test-task1.zip

Download Labels (each row of the label file contains the name of the image followed by the label)
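As a minimal sketch of how Task I predictions could be scored against this label file, the snippet below assumes rows of the form `image_name label`, separated by whitespace, a comma or a semicolon, with integer labels (0 for real, 1 for Deepfake, mirroring the `0-`/`1-` prefixes of the training archives). The delimiter and the label encoding are assumptions and should be checked against the downloaded file; the function names and image names are hypothetical.

```python
import re

def parse_labels(text):
    """Parse rows of 'image_name label' into a dict {name: int_label}."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Assumption: name and label are separated by a comma,
        # a semicolon, or whitespace, and the name contains none of them.
        parts = [p for p in re.split(r"[,;\s]+", line) if p]
        labels[parts[0]] = int(parts[-1])
    return labels

def accuracy(predictions, ground_truth):
    """Fraction of ground-truth images whose predicted label matches."""
    if not ground_truth:
        raise ValueError("ground truth is empty")
    correct = sum(
        1 for name, label in ground_truth.items()
        if predictions.get(name) == label
    )
    return correct / len(ground_truth)

# Hypothetical file content and predictions, for illustration only.
truth = parse_labels(
    "img_0001.png 0\nimg_0002.png 1\nimg_0003.png 1\nimg_0004.png 0\n"
)
preds = {"img_0001.png": 0, "img_0002.png": 1,
         "img_0003.png": 0, "img_0004.png": 0}
print(f"accuracy = {accuracy(preds, truth):.2f}")
```

Images missing from `predictions` count as errors here, which seems the safer default when comparing a submission against the full test set.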

Manipulation details

Each row contains the name of the image followed by the list of manipulations applied to it. An empty list means the image was not attacked; otherwise, each manipulation type is given with its parameters. For example, JPEG compr: 84 denotes JPEG compression with a quality factor of 84; Rotation: 225 degrees denotes a rotation of 225 degrees; Gaussian: 9 denotes a Gaussian blur with kernel size 9. For more details on the manipulation operations applied to the images, please read the official paper.
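The row format above can be read with a small parser. The sketch below is only illustrative: it assumes the image name is the first token of the row and contains no spaces, and that each manipulation is written as `<name>: <number>`, optionally followed by a unit such as `degrees`. The separators used in the actual file are not documented here, so the regular expressions and the function name are assumptions.

```python
import re

# Assumption: each manipulation looks like "<name>: <number>", where the
# name consists of letters and spaces (e.g. "JPEG compr", "Rotation").
_ITEM = re.compile(r"([A-Za-z][A-Za-z ]*?)\s*:\s*(-?\d+(?:\.\d+)?)")

def parse_manipulation_row(row):
    """Split a row into (image_name, [(manipulation, value), ...])."""
    # Assumption: the image name is the first token and has no spaces.
    parts = re.split(r"[\s,;]+", row.strip(), maxsplit=1)
    name = parts[0]
    rest = parts[1] if len(parts) > 1 else ""
    ops = [(kind.strip(), float(value)) for kind, value in _ITEM.findall(rest)]
    return name, ops

# Hypothetical rows built from the examples given in the text.
attacked = parse_manipulation_row(
    "img_0001.png JPEG compr: 84, Rotation: 225 degrees, Gaussian: 9"
)
clean = parse_manipulation_row("img_0002.png")
print(attacked)
print(clean)
```

An empty operation list corresponds to an image that was not attacked, which makes it easy to report accuracy separately for manipulated and unmanipulated test images.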

TASK 2:

test-task2.zip

Download Labels (each row of the label file contains the name of the deepfake image followed by the name of the source image)

Source images are available here [DOWNLOAD FOLDER]
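Task II was ranked by average Manhattan distance between reconstructed and true source images. The following sketch shows one way that score could be computed once each Deepfake has been matched to its source via the label file. It assumes images are already loaded as flat sequences of pixel values of equal length. Whether the official evaluation sums or averages over pixels, and how pixel values are scaled, is not stated here, so those choices and the function names are assumptions.

```python
def manhattan_distance(a, b):
    """L1 distance between two equally sized flat pixel sequences."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of values")
    return sum(abs(x - y) for x, y in zip(a, b))

def average_manhattan(pairs):
    """Mean L1 distance over (reconstructed, source) image pairs."""
    if not pairs:
        raise ValueError("no image pairs given")
    return sum(manhattan_distance(r, s) for r, s in pairs) / len(pairs)

# Toy 4-pixel "images", for illustration only.
pairs = [
    ([10, 20, 30, 40], [12, 18, 30, 44]),    # 2 + 2 + 0 + 4 = 8
    ([0, 255, 128, 64], [0, 250, 128, 60]),  # 0 + 5 + 0 + 4 = 9
]
print(average_manhattan(pairs))
```

A lower value means the reconstructions are closer to the true sources, so teams would be ranked in ascending order of this score.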

REFERENCES

- [1] Z. Liu, P. Luo, X. Wang and X. Tang, Deep Learning Face Attributes in the Wild, 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 3730-3738, doi: 10.1109/ICCV.2015.425.

- [2] https://github.com/NVlabs/ffhq-dataset, accessed on 03/11/2021.

- [3] Y. Choi, M. Choi, M. Kim, J. Ha, S. Kim and J. Choo, StarGAN: Unified Generative Adversarial Networks for Multi-domain Image-to-Image Translation, 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 8789-8797, doi: 10.1109/CVPR.2018.00916.

- [4] W. Cho, S. Choi, D. K. Park, I. Shin and J. Choo, Image-To-Image Translation via Group-Wise Deep Whitening-And-Coloring Transformation, 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 10631-10639, doi: 10.1109/CVPR.2019.01089.

- [5] Z. He, W. Zuo, M. Kan, S. Shan and X. Chen, AttGAN: Facial Attribute Editing by Only Changing What You Want, in IEEE Transactions on Image Processing, vol. 28, no. 11, pp. 5464-5478, Nov. 2019, doi: 10.1109/TIP.2019.2916751.

- [6] T. Karras, S. Laine and T. Aila, A Style-Based Generator Architecture for Generative Adversarial Networks, 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4396-4405, doi: 10.1109/CVPR.2019.00453.

- [7] T. Karras, S. Laine, M. Aittala, J. Hellsten, J. Lehtinen and T. Aila, Analyzing and Improving the Image Quality of StyleGAN, 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 8107-8116, doi: 10.1109/CVPR42600.2020.00813.

- [8] Y. Choi, Y. Uh, J. Yoo and J.-W. Ha, StarGAN v2: Diverse Image Synthesis for Multiple Domains, 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 8185-8194, doi: 10.1109/CVPR42600.2020.00821.