Datasets

We built a dataset of egocentric videos acquired by a single user in five different personal locations: car, coffee vending machine, office, tv and home office. The dataset has been subsequently extended to 8 personal locations: car, coffee vending machine, office, tv, home office, kitchen, sink and garage. The considered personal locations arise from the daily activities of the user and are relevant to assistive applications such as quality of life assessment and daily routine monitoring. Given the availability of diverse wearable devices on the market, we use four different cameras in order to assess the influence of some device-specific factors, such as the wearing modality and the Field Of View (FOV), on the considered task.

Specifically, we consider the smart glasses Recon Jet (RJ), two ear-mounted Looxcie LX2, and a wide-angular chest-mounted Looxcie LX3. The Recon Jet and the Looxcie LX2 devices are characterized by narrow FOVs (70° and 65,5° respectively), while the FOV of the Looxcie LX3 is considerably larger (100°). One of the two ear-mounted Looxcie LX2 is equipped with a wide-angular converter, which allows to extend its Field Of View at the cost of some fisheye distortion. The wide-angular LX2 camera will be referred to as LX2W, while the perspective LX2 camera will be referred to as LX2P.

The technical specifications of the considered cameras are reported in the following:

| Camera | Resolution | Field Of View |

| Recon Jet | 1280 x 720 | 70° |

| Looxcie LX2 | 640 x 480 | 70° |

| Looxcie LX3 | 1280 x 720 | 70° |

In order to perform fair comparisons across the different devices, we built four independent, yet compliant, device-specific datasets. Each dataset comprises data acquired by a single device and is provided with its own training and test sets.

The training sets consist in short videos (about 10 seconds) of the personal locations of interest. During the acquisition of the training videos, the user turns his head (or chest, in the case of chest-mounted devices) in order to cover the most relevant views of the environment. A single video-shot per each location of interest is included in each training set.

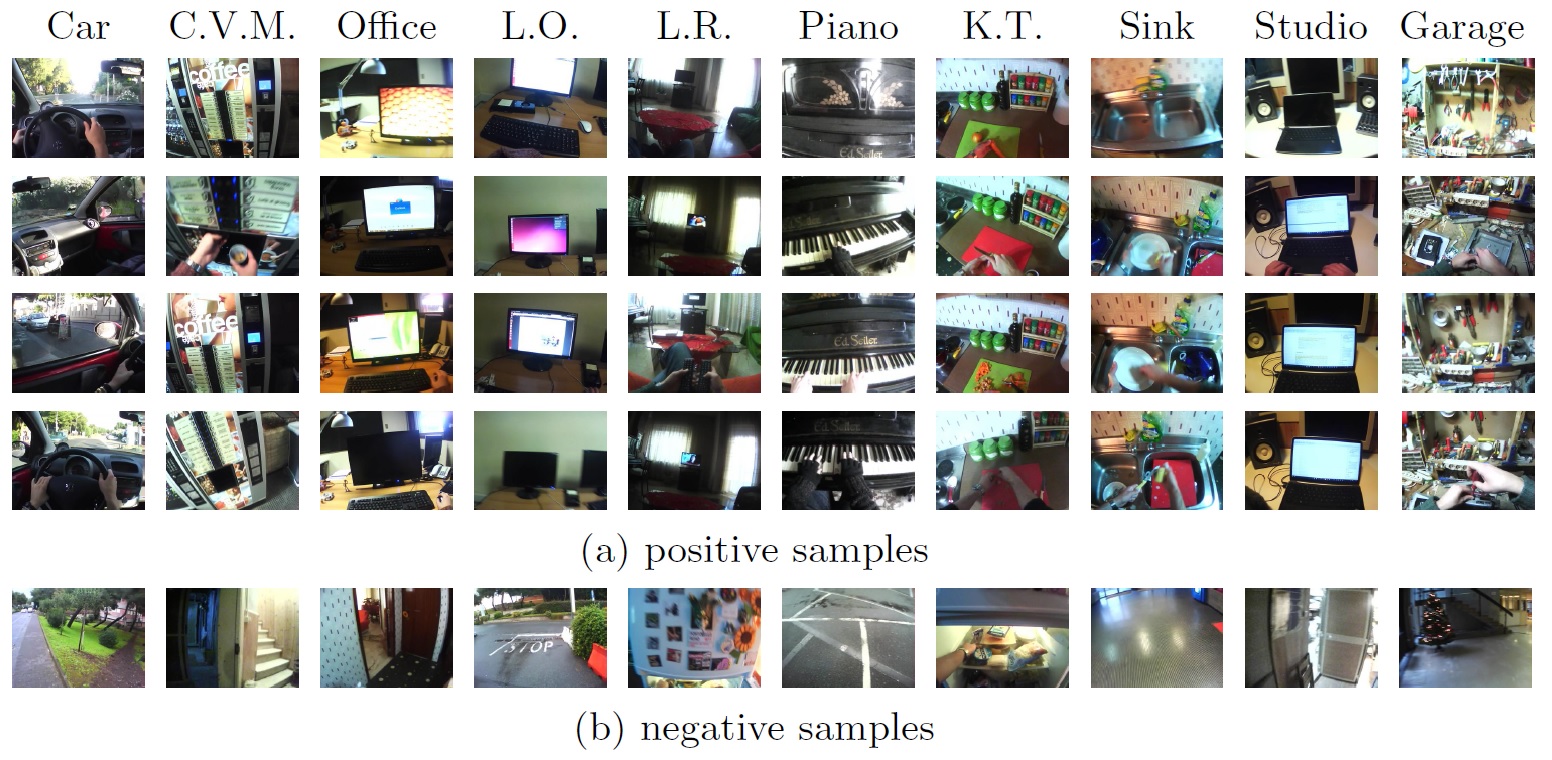

The test sets contain medium length videos (5 to 10 minutes) acquired by the user in the considered locations while performing normal activities related to the location of interest. Each test set comprises 5 videos for each location of interest. In order to gather likely negative samples, we acquired several short videos not representing any of the locations under analysis. The negative videos comprise indoor, outdoor scenes, other desks and other vending machines. The negative videos are divided into two separate sets: test negatives and "optimization" negatives. The role of the latter set of negative samples is to provide an independent set of data useful to optimize the parameters of the negative rejection methods. The overall dataset amounts to more than 20 hours of video and more than one million frames in total.

To ensure the repeatability of the results, we provide both the original (4 locations) and extended (8 locations) versions of the dataset, along with the details. The datasets consist in collections of frames sampled from the original videos. Access to the full length videos can be required writing an email to this address .

10-locations dataset [EPIC 2016]

This dataset is related to the paper "Temporal Segmentation of Egocentric Videos to Highlight Personal Locations of Interest" submitted to IEEE Transactions on Human-Machine Systems.

Assuming that the user is required to provide only minimal data to define his personal locations of interest, the training set consists in 10 short videos (one per each location) with an average length of 10 seconds per video. The validation set provides an independent set of images which can be used to check the generalization ability of the considered method. The test set consists in 10 video sequences covering the considered personal locations of interest, negative frames and transitions among locations. Each frame in the test sequences has been manually labeled as either one of the 10 locations of interest or as a negative. The validation set contains 10 medium length (approximately 5 to 10 minutes) videos of activities performed in the considered locations (one video per location). T he validation videos have been temporally subsampled in order to extract exactly 200 validation frames per-location, while all frames are considered from training and test videos. We have also acquired 10 medium length videos containing negative samples from which we uniformly extract 300 frames for training (in order to allow comparison with methods which use negatives for learning or optimization) and 200 frames for validation. The proposed dataset contains 2142 positive, plus 300 negative frames for training, 2000 positive, plus 200 negative frames for validation and 132234 mixed (both positive and negative) frames for testing purposes.

This amounts to a total of 133770 extracted frames to be used for experimental purposes. The dataset can be downloaded from the following links:

8-locations dataset [THMS 2016]

This dataset is related to the paper "Recognizing of Personal Locations from Egocentric Videos" IEEE Transactions on Human-Machine Systems.

At training time, all the frames contained in the 10-seconds video shots are used, while the test videos are temporally subsampled. In order to reduce the amount of frames to be processed (while still retaining some temporal coherence) for each location in the test sets, we extract 200 subsequences of 15 contiguous frames. The starting frames of the subsequences are uniformly sampled from the 5 videos available for each class. The same subsampling strategy is applied to the test negatives. We also extract 300 frames form the optimization negative videos.

This amounts to a total of 133770 extracted frames to be used for experimental purposes. The dataset can be downloaded from the following links:

5-contexts dataset [ACVR 2015]

This dataset is related to the paper "Recognizing Personal Contexts from Egocentric Images" published in the third workshop on Assistive Computer Vision and Robotics in conjunction with ICCV 2015.

At training time, all the frames contained in the 10-seconds video shots are used, while at test time, only about 1000 frames per-class extracted from the testing videos are used.

The dataset available here contains the extracted frames to ensure repeatability of the experiments. Each device-specific dataset contains about 6000 frames (1000 per each class, plus 1000 for the negatives) for testing and about 1000 frames for training (200 frames per-location in average). The dataset can be downloaded from the following link: