Vito Santarcangelo, Giovanni Maria Farinella, Sebastiano Battiato

European Conference on Computer Vision Workshop – EPIC

Abstract

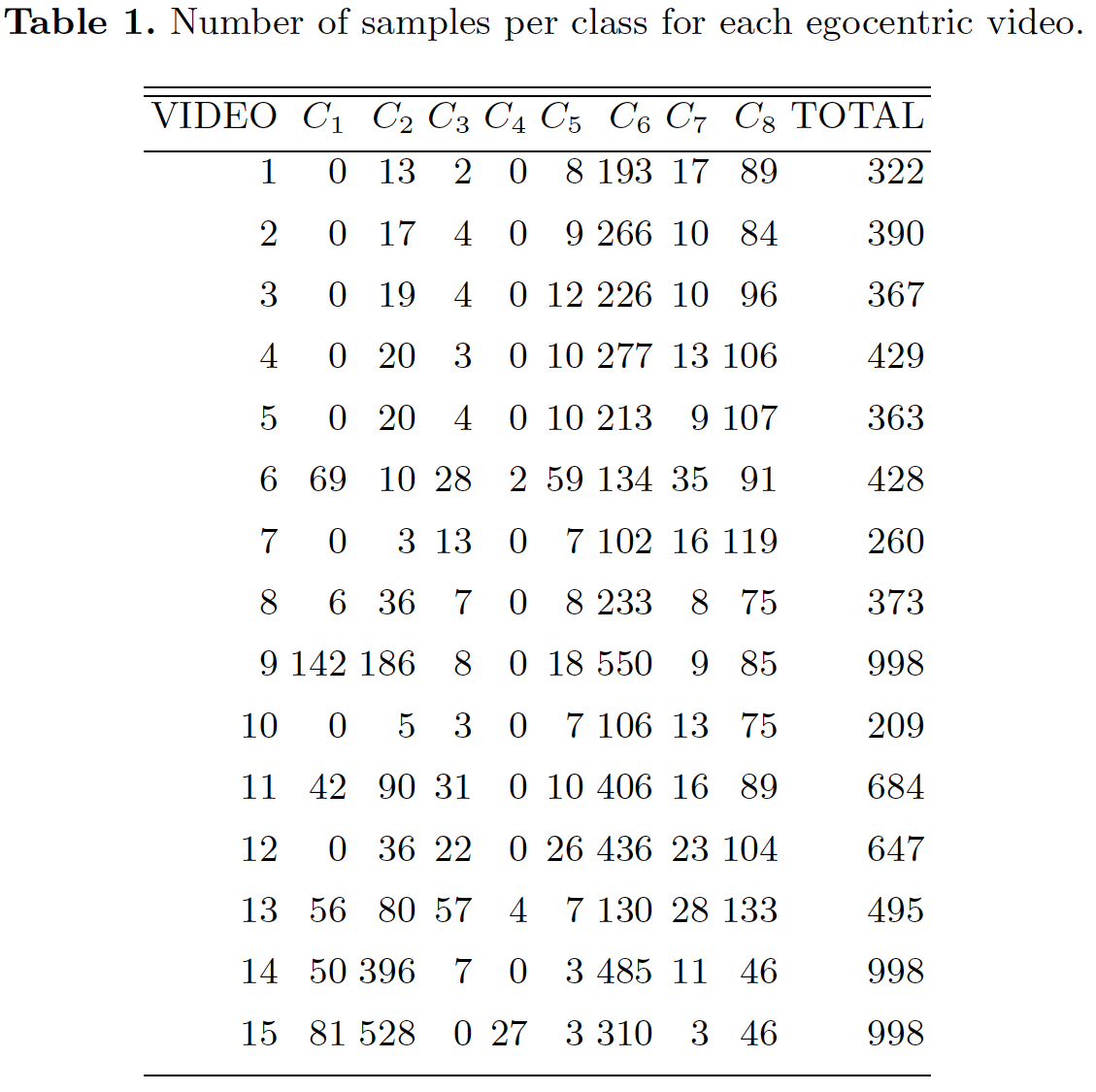

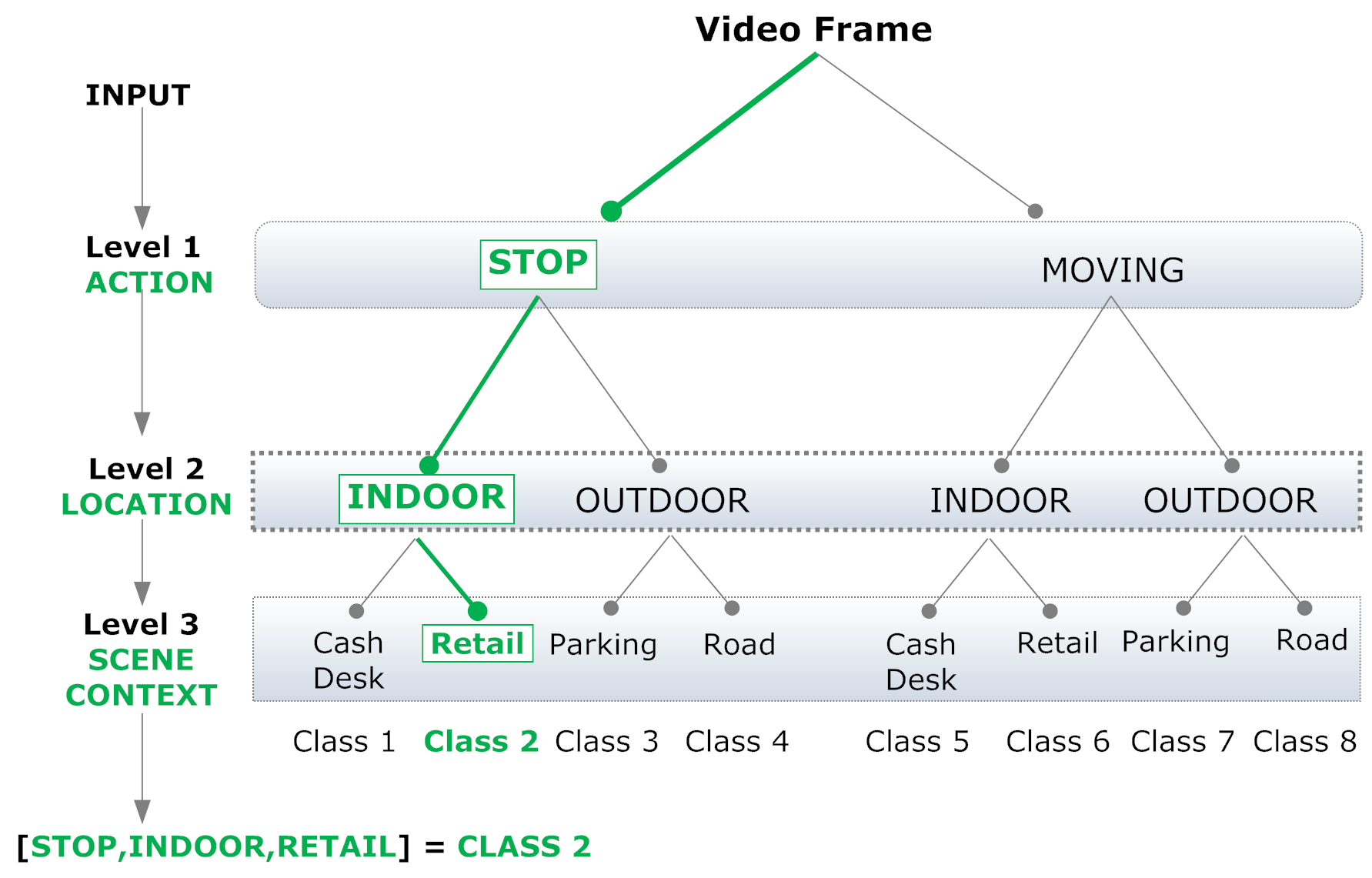

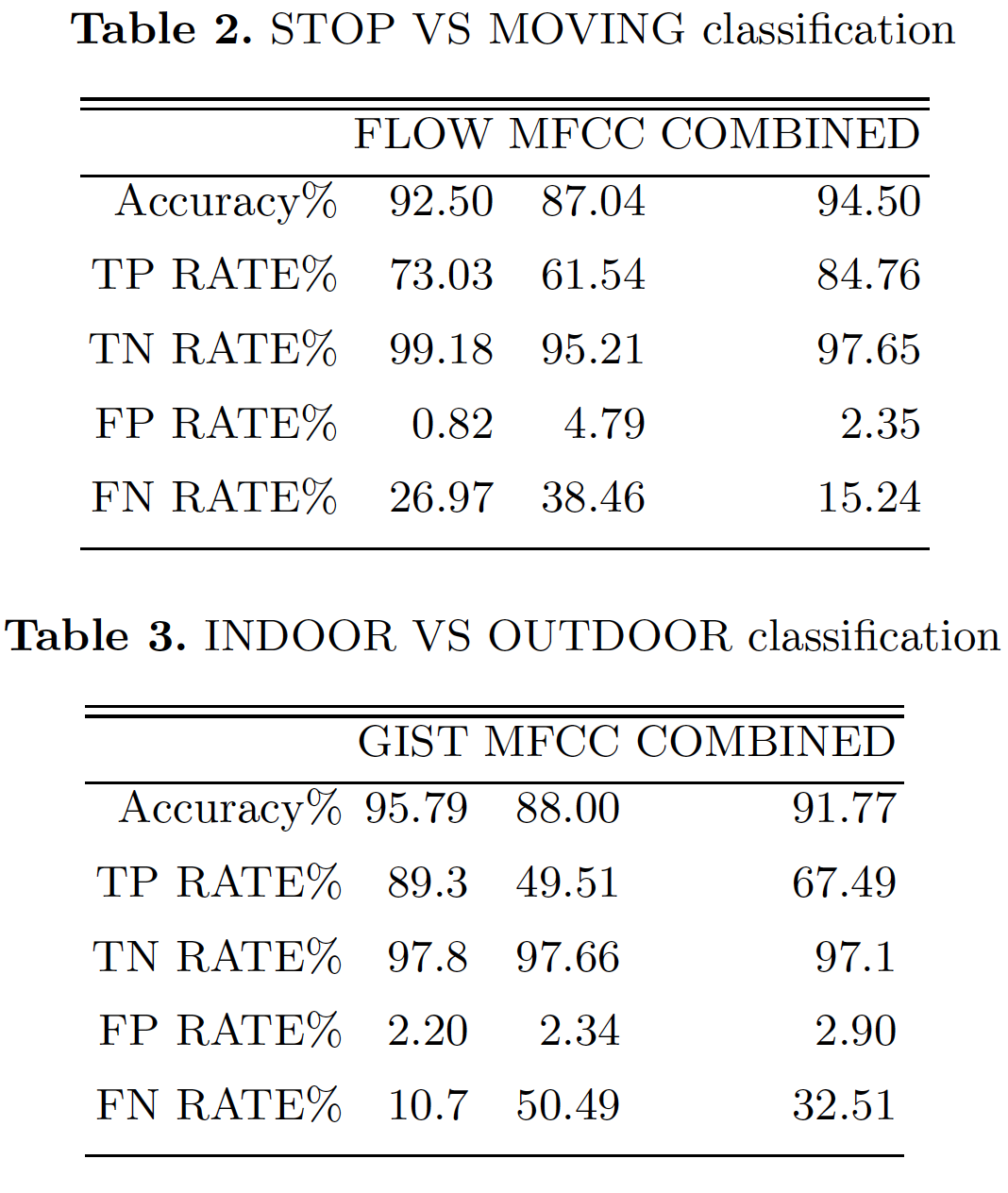

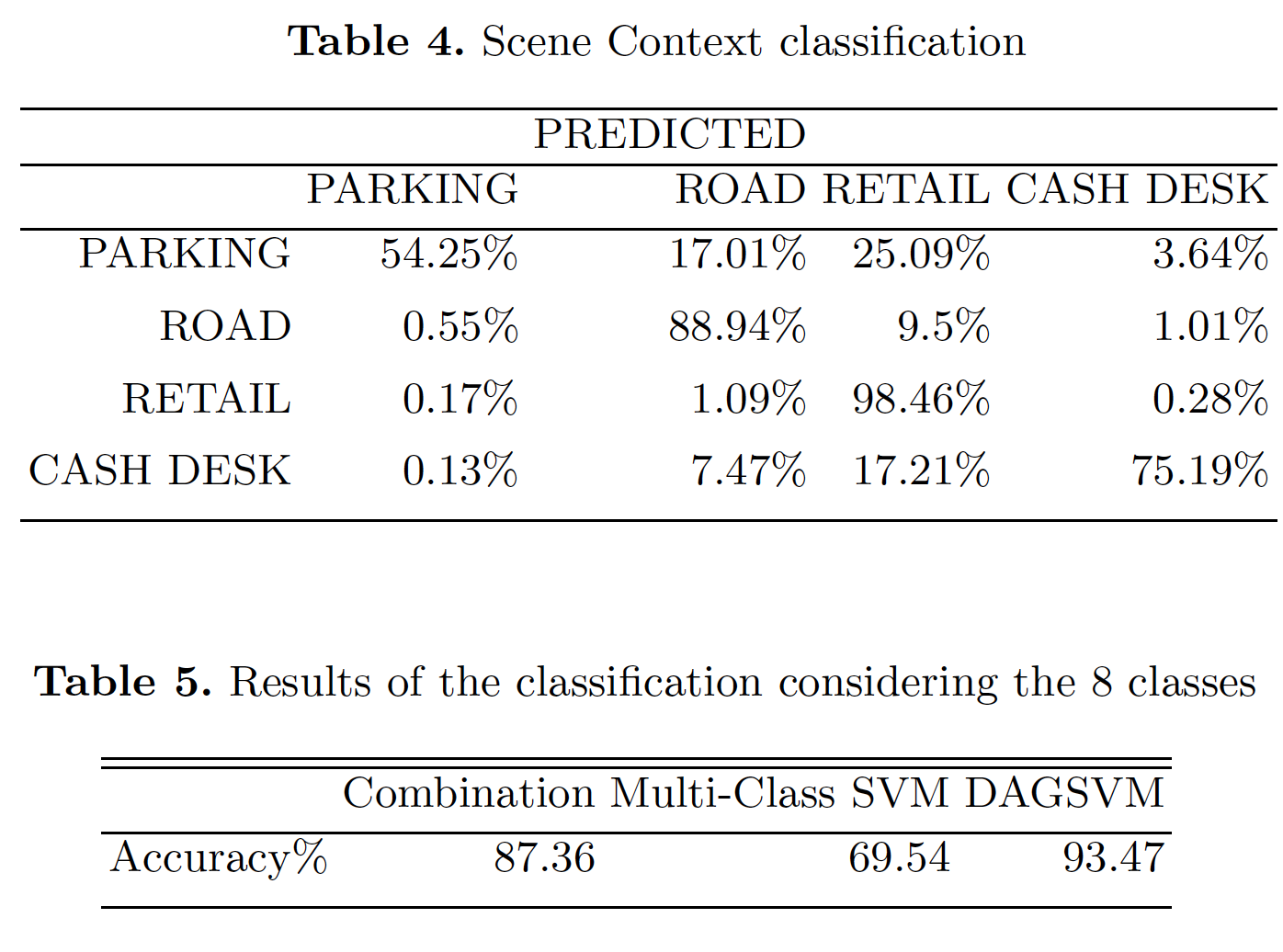

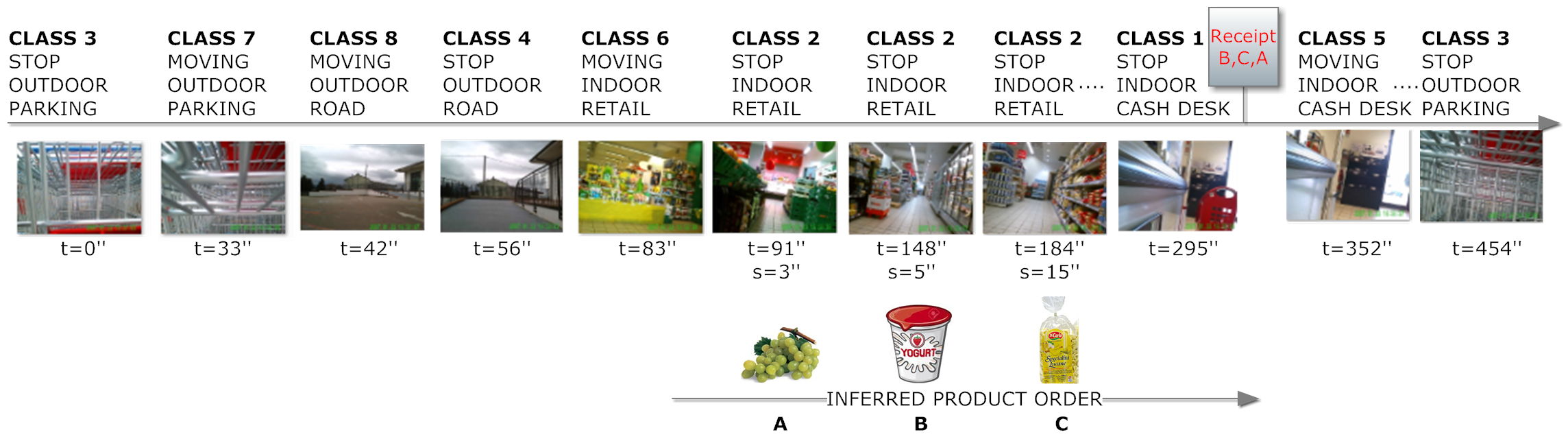

This paper introduces a new application scenario for egocentric vision: Visual Market Basket Analysis (VMBA). The main goal in this application domain is to understand customer behavior in retail stores from videos acquired with cameras mounted on shopping carts (which we call narrative carts). To properly study the problem and to set the first VMBA challenge, we introduce the VMBA15 dataset. The dataset is composed of 15 different egocentric videos acquired with narrative carts while users shop in a retail store. The frames of each video have been labeled according to 8 possible behaviors of the carts. The considered cart behaviors reflect the behaviors of the customers from the beginning (cart picking) to the end (cart releasing) of their shopping in the store. The inferred information, such as the duration of cart stops within the store or the stops at cash desks, could be coupled with classic Market Basket Analysis information (i.e., receipts) to help retailers manage their spaces better. To benchmark the proposed problem on the introduced dataset, we considered classic visual and audio descriptors to represent the video frames at each instant. Classification was performed with the Directed Acyclic Graph SVM learning architecture. Experiments show that an accuracy of more than 90% can be obtained on the 8 considered classes.
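
The Directed Acyclic Graph decision procedure mentioned above can be illustrated with a minimal sketch. It is not the authors' implementation: the pairwise deciders here are nearest-centroid rules standing in for trained binary SVMs, the 2-D features are toy values rather than the visual and audio descriptors used in the paper, and the integer labels 0 to 7 and the names `train_pairwise` and `dag_predict` are hypothetical. What it shows is the general DAG scheme: one binary decider per class pair, and a prediction that eliminates one candidate class per evaluation.

```python
from itertools import combinations


def train_pairwise(samples, labels):
    """Build one binary decider per pair of classes.

    Assumption: a nearest-centroid rule stands in for a trained binary SVM.
    """
    classes = sorted(set(labels))
    centroids = {}
    for c in classes:
        rows = [x for x, y in zip(samples, labels) if y == c]
        centroids[c] = [sum(col) / len(rows) for col in zip(*rows)]

    def make_decider(a, b):
        def decide(x):
            # Squared Euclidean distance to each class centroid.
            da = sum((xi - ci) ** 2 for xi, ci in zip(x, centroids[a]))
            db = sum((xi - ci) ** 2 for xi, ci in zip(x, centroids[b]))
            return a if da <= db else b
        return decide

    deciders = {(a, b): make_decider(a, b) for a, b in combinations(classes, 2)}
    return classes, deciders


def dag_predict(x, classes, deciders):
    """Walk the decision DAG: each pairwise test removes one candidate."""
    candidates = list(classes)  # kept in sorted order, so (first, last) is a valid key
    evaluations = 0
    while len(candidates) > 1:
        first, last = candidates[0], candidates[-1]
        winner = deciders[(first, last)](x)
        evaluations += 1
        if winner == first:
            candidates.pop()    # the last candidate is eliminated
        else:
            candidates.pop(0)   # the first candidate is eliminated
    return candidates[0], evaluations


# Toy data: 8 hypothetical classes, two 2-D samples each (not VMBA15 features).
samples, labels = [], []
for k in range(8):
    samples += [[10.0 * k, 0.0], [10.0 * k + 1.0, 1.0]]
    labels += [k, k]

classes, deciders = train_pairwise(samples, labels)
label, evaluations = dag_predict([30.2, 0.4], classes, deciders)
print(label, evaluations, len(deciders))
```

With 8 classes the scheme trains 8·7/2 = 28 pairwise deciders but consults only 7 of them per frame, which is the main practical appeal of the DAG arrangement over voting across all pairs.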