Method

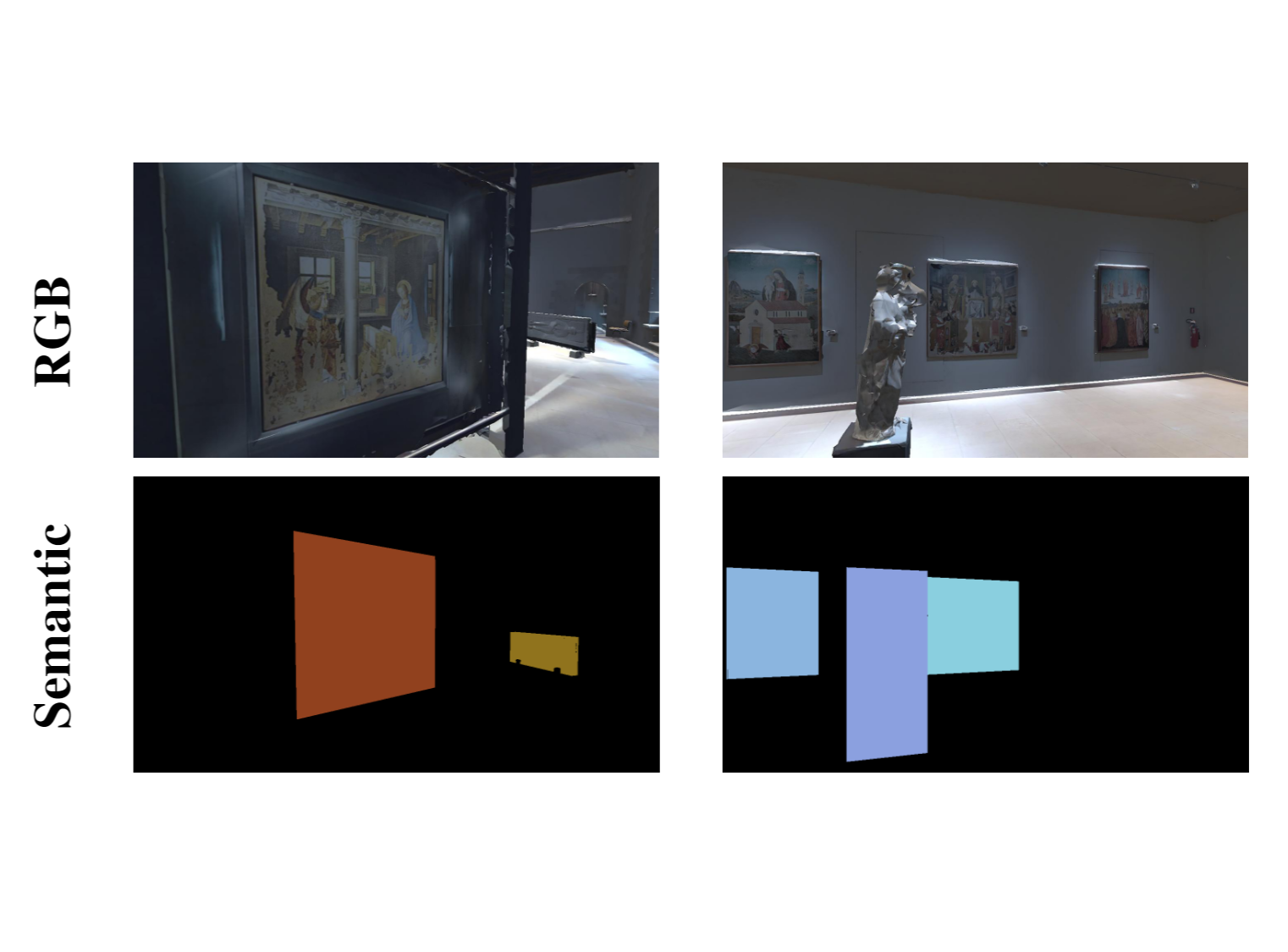

We propose a tool for Unity 3D that generates synthetic datasets for studying the image-based localization (IBL) problem. The pipeline shown above comprises two main steps:

- Virtualization of the environment

- Simulation and generation of the dataset

We propose acquired a 3D model of a real cultural heritage and use this one and second one on related work,

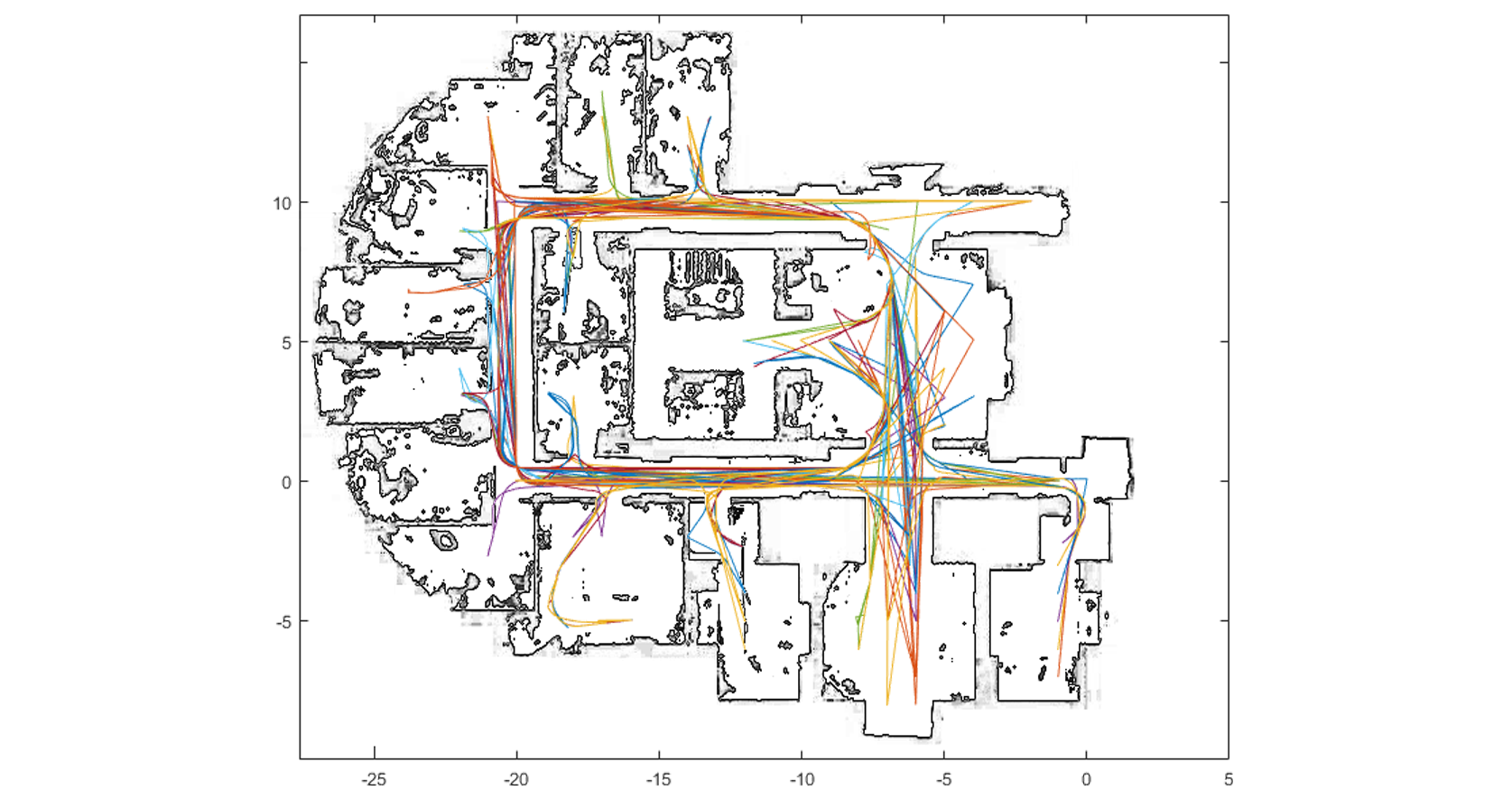

to simulte navigations inside the virtual environment. For the new dataset, we performed experiments on 3DoF localization and artwork

detection.

The 3DoF localization experiment follows an image retrieval pipeline and uses a Triplet Network with an Inception V3 backbone to learn a representation useful for the localization task.
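As an illustration of the objective a Triplet Network typically optimizes, here is a minimal sketch of a triplet margin loss on plain Python vectors. The Euclidean distance, the margin value of 0.2, and the function names are assumptions made for this example; they are not taken from our training code.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_margin_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss (margin is an assumed, illustrative value).

    The loss is zero once the anchor is closer to the positive than to the
    negative by at least `margin`; otherwise it grows linearly.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)
```

Minimizing this quantity pulls embeddings of images taken at nearby poses together and pushes embeddings of images taken at distant poses apart, which is what makes a nearest-neighbor lookup in the embedding space meaningful.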

We trained the model to learn a representation space and performed a 1-nearest-neighbor search in that space to assign a 3DoF label to each new query image.
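The retrieval step can be sketched as a brute-force 1-nearest-neighbor lookup. This is only an illustration: the embeddings are assumed to be already extracted, the 3DoF label is represented as a hypothetical `(x, y, angle)` tuple, and squared Euclidean distance is an assumed choice of metric.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_neighbor_label(query, train_embeddings, train_labels):
    """Return the label of the training embedding closest to `query`.

    `train_labels[i]` is the 3DoF label of `train_embeddings[i]`,
    here an assumed (x, y, angle) tuple.
    """
    best = min(
        range(len(train_embeddings)),
        key=lambda i: squared_distance(query, train_embeddings[i]),
    )
    return train_labels[best]


# Toy usage with 2-D embeddings.
train_embeddings = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
train_labels = [(0.0, 0.0, 0.0), (1.0, 1.0, 90.0), (5.0, 5.0, 180.0)]
predicted = nearest_neighbor_label([0.9, 1.2], train_embeddings, train_labels)
```

For large training sets, an approximate nearest-neighbor index would likely replace the linear scan; the scan is kept here for clarity.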

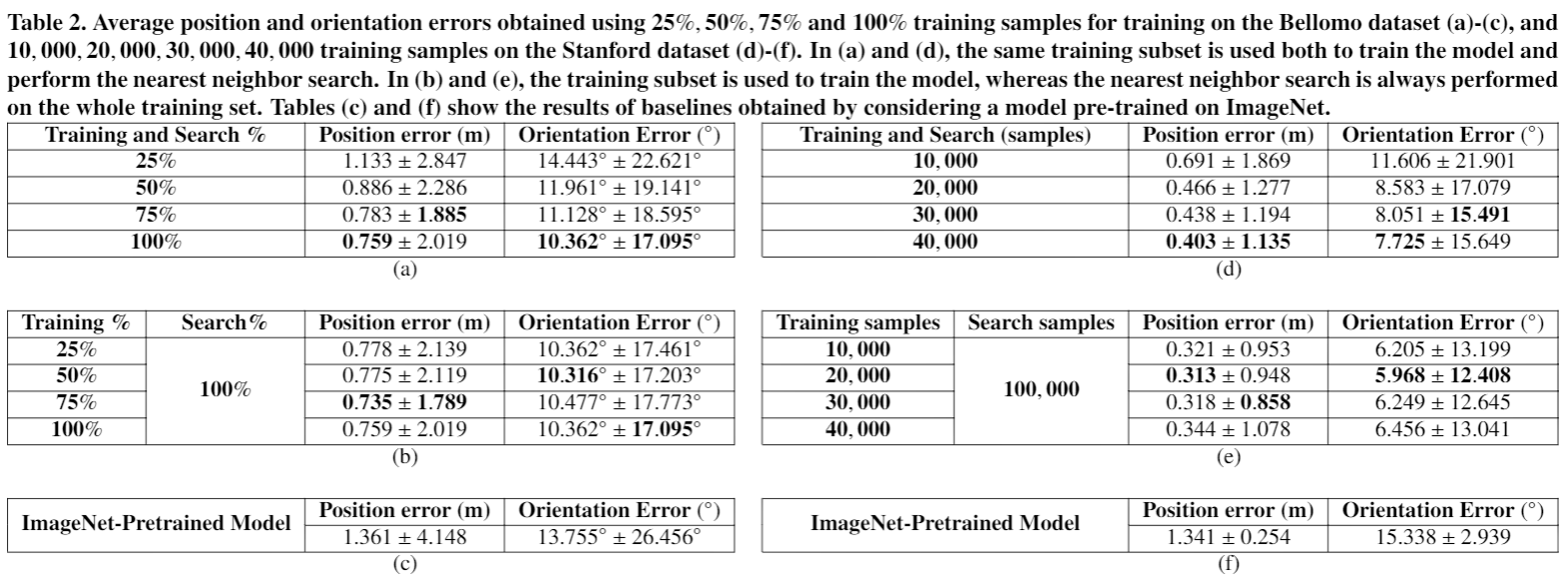

For the Bellomo dataset, at training time we fed the model triplets generated by sampling 25%, 50%, 75%, and 100% of the images in the Training set. At test time, we used the trained model to extract representations for the images of the navigation held out as the Test set, and assigned to each Test image the label of its nearest-neighbor image in the Training set.

For the Stanford dataset, at training time we fed the model triplets generated by sampling 10000, 20000, 30000, and 40000 images from the Training set. The rest of the pipeline is the same.
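The sampling of a training subset and the construction of triplets can be sketched as follows. The rule used to pick positives and negatives (distance thresholds on the camera position, ignoring orientation), the threshold values, and all names are assumptions made for illustration; they do not describe the exact criterion used in our experiments.

```python
import math
import random


def sample_subset(items, fraction, seed=0):
    """Randomly keep a fraction of the training items (e.g. 0.25 for 25%)."""
    rng = random.Random(seed)
    k = max(1, round(len(items) * fraction))
    return rng.sample(items, k)


def make_triplets(samples, pos_radius=0.5, neg_radius=2.0, seed=0):
    """Build (anchor, positive, negative) image-id triplets.

    `samples` is a list of (image_id, (x, y)) pairs. A positive lies within
    `pos_radius` of the anchor and a negative at least `neg_radius` away;
    both thresholds are assumed values.
    """
    rng = random.Random(seed)
    triplets = []
    for anchor_id, anchor_pos in samples:
        positives = [
            i for i, p in samples
            if i != anchor_id and math.dist(anchor_pos, p) <= pos_radius
        ]
        negatives = [
            i for i, p in samples if math.dist(anchor_pos, p) >= neg_radius
        ]
        # Skip anchors that have no valid positive or negative.
        if positives and negatives:
            triplets.append(
                (anchor_id, rng.choice(positives), rng.choice(negatives))
            )
    return triplets
```

Sampling a fixed number of images instead of a fraction, as done for the Stanford dataset, amounts to calling `random.Random(seed).sample(items, n)` directly.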

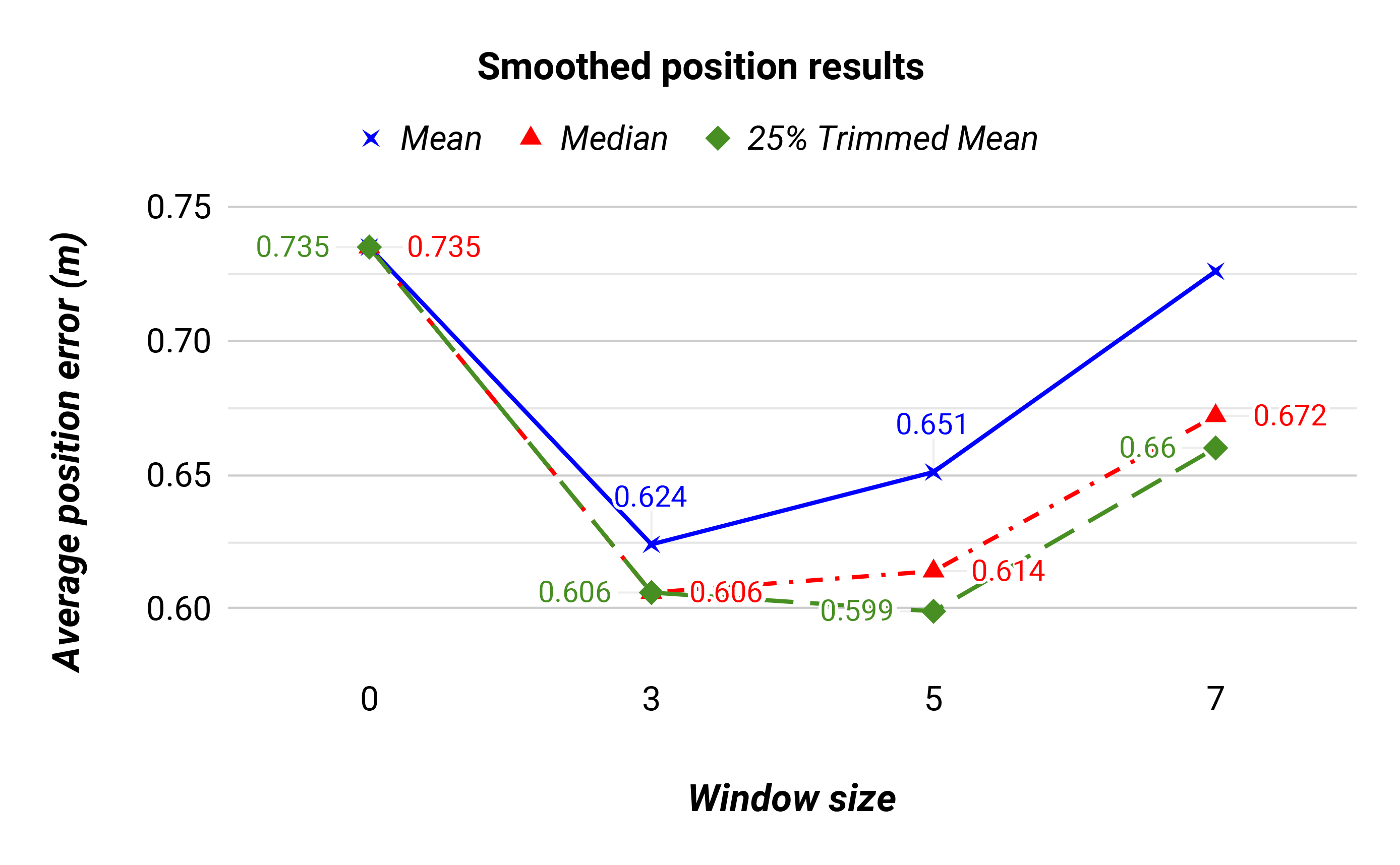

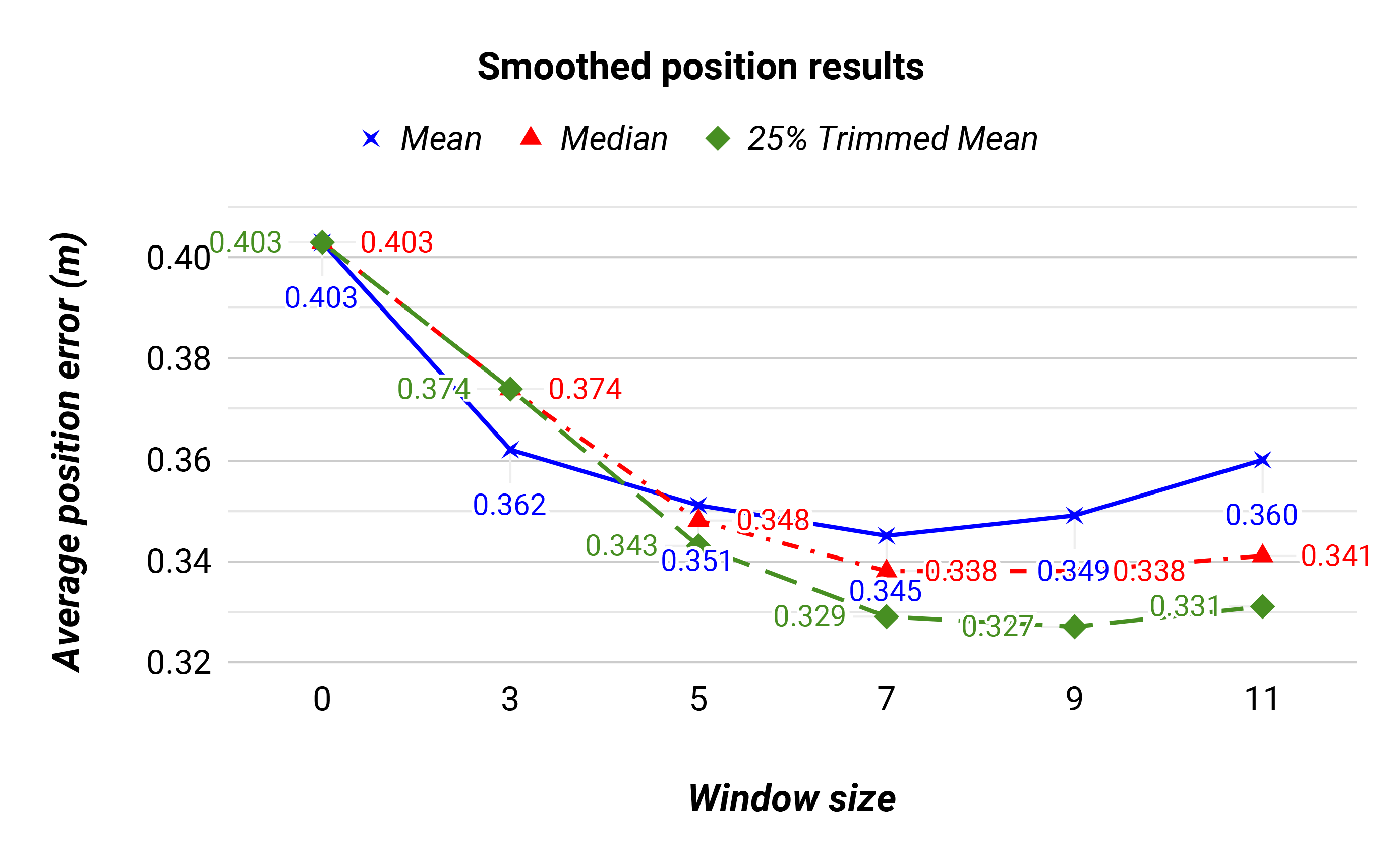

For both datasets, we evaluated temporal smoothing as a post-processing technique to improve the predicted labels.
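One simple form of temporal smoothing is a sliding-window median over the sequence of predicted positions, sketched below. The choice of a median filter, the window size, and the restriction to the `(x, y)` part of the label are assumptions for illustration; the specific smoothing techniques we evaluated may differ.

```python
import statistics


def smooth_positions(positions, window=5):
    """Apply a centered sliding-window median to a sequence of (x, y) tuples.

    Predictions are assumed to be ordered in time (consecutive frames of a
    navigation). The window is truncated at the sequence boundaries.
    """
    half = window // 2
    smoothed = []
    for t in range(len(positions)):
        lo = max(0, t - half)
        hi = min(len(positions), t + half + 1)
        chunk = positions[lo:hi]
        # Median is taken independently for each coordinate.
        smoothed.append(tuple(statistics.median(c) for c in zip(*chunk)))
    return smoothed
```

Because consecutive frames of a navigation are taken at nearby positions, an isolated retrieval error shows up as a sudden jump in the predicted trajectory, and a median over neighboring frames suppresses it.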

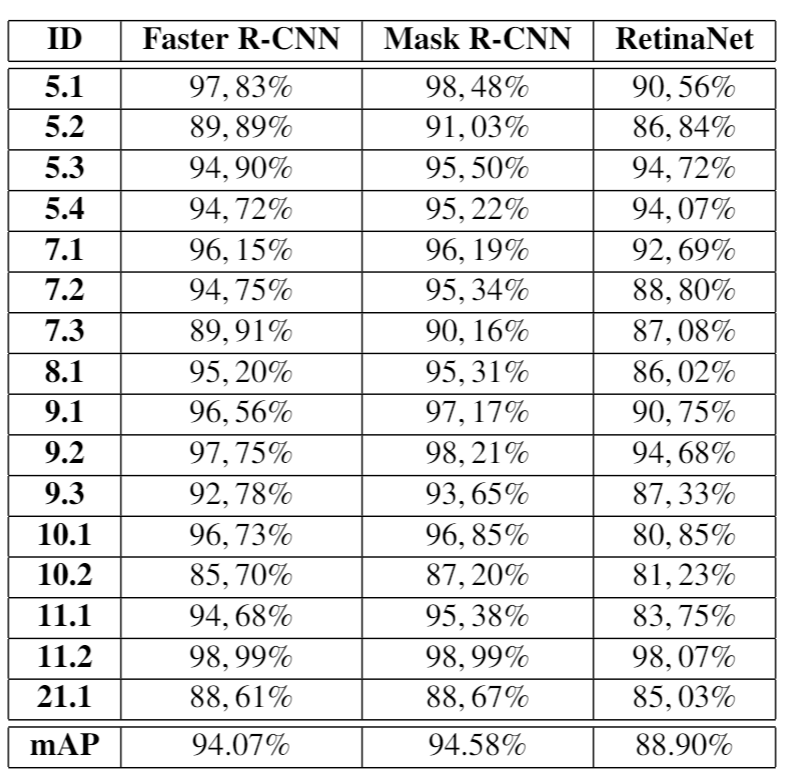

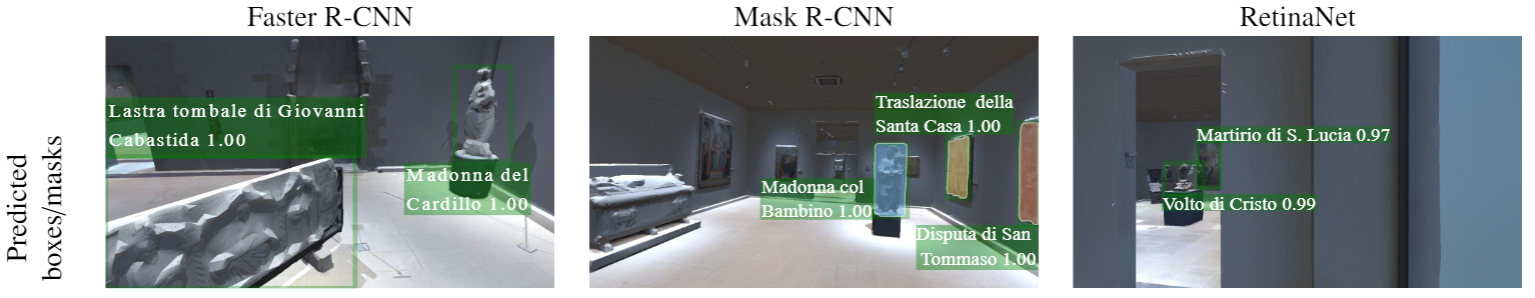

Artwork detection was performed only on the Bellomo dataset.